Step-by-Step Guide to Creating a Generative Audio Model

May 22, 2025

The world of audio technology has evolved rapidly, with AI and machine learning taking center stage in reshaping how we generate and interact with sound. According to a recent report, the global AI in audio market size is projected to reach USD 3.7 billion by 2027, growing at a CAGR of 25.1%. This surge is largely attributed to innovations in AI Audio Generation and Generative Audio Models, enabling automated, high-quality sound generation for a variety of applications.

Industries such as music production, virtual assistants, gaming, film production, and assistive technology stand to benefit the most from these advancements. Generative Audio Models can significantly streamline the audio creation process, offering enhanced creativity, efficiency, and personalization.

In this blog, we’ll explore how to create a Generative Audio Model, using cutting-edge techniques like Deep Learning for Audio and Neural Networks for Sound. This will serve as your step-by-step guide to building an AI audio generation model, helping you understand how to harness AI’s potential to create sound and music with unprecedented quality and creativity.

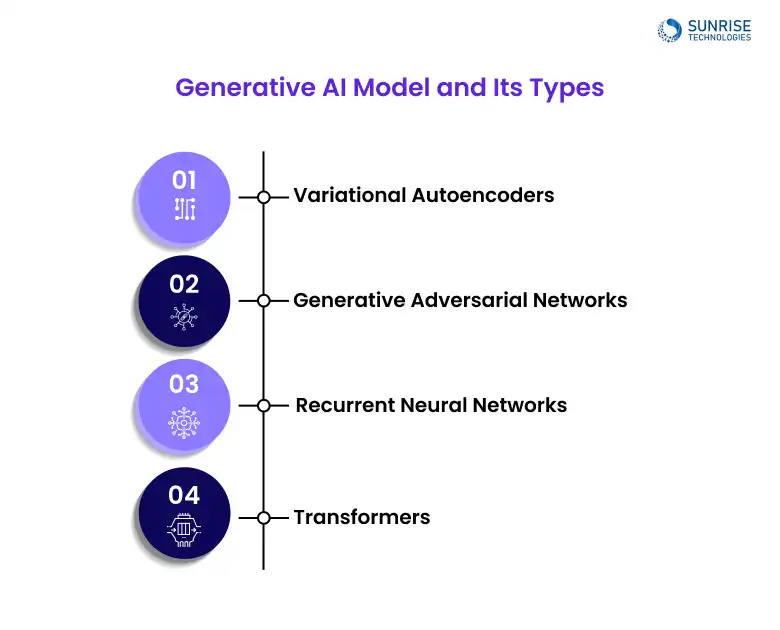

Generative AI Models and Their Types

Generative AI models are a category of algorithms designed to create new data based on patterns learned from existing data. These models can generate realistic audio, from speech to music to sound effects. Here’s a quick look at the different types of Generative AI Models commonly used in AI Audio Generation:

Variational Autoencoders (VAEs) are great for capturing the latent structure of audio data and producing smooth, coherent outputs.

- Generate continuous and natural-sounding audio, ideal for ambient music or background effects.

- Often used in AI voice synthesis where subtle variations matter.

- Offer a compact, noise-tolerant encoding-decoding mechanism, useful in audio synthesis techniques.

Known for their dual-network structure, Generative Adversarial Networks (GANs) are widely adopted in music generation AI and sound realism tasks.

- The generator creates fake audio; the discriminator judges its realism.

- Ideal for realistic music and speech synthesis with complex textures.

- Heavily used in audio data generation using generative AI models for sound design.

Recurrent Neural Networks (RNNs) handle sequential audio inputs, making them effective for structured, time-based outputs.

- Best for tasks involving temporal dynamics like melody or spoken word.

- Capture short-term dependencies efficiently, which suits repetitive, loop-based music generation.

Transformers excel in managing long sequences and understanding global context, perfect for audio with multiple overlapping layers.

- Useful when training AI for music generation, where context matters across long audio segments.

- Provide state-of-the-art results in neural networks for sound and lyrics generation.

- Lead to breakthroughs in complex music composition and adaptive soundtracks.

What Are Generative Audio Models?

A Generative Audio Model is an AI system trained to generate new audio content. These models learn patterns from an extensive dataset of sound and music, then produce new audio that is often hard to distinguish from human-made recordings.

Generative audio models are used in several domains, such as AI voice synthesis for virtual assistants and content creators, or music generation AI tools for composers and producers. By leveraging deep learning for audio, these models can generate speech, music, and sound effects at a level that was previously unthinkable.

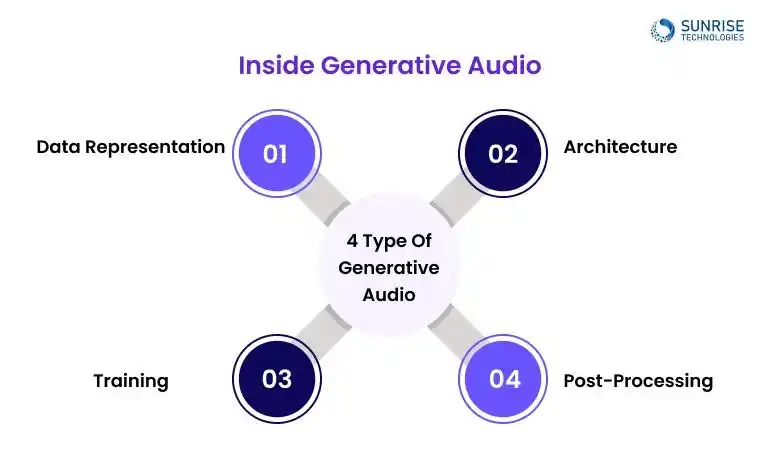

How Generative Audio Models Work: The Technical Core

So, how does an AI audio model go from silence to generating stunning soundtracks, lifelike voices, or ambient audio? It comes down to deep learning doing its thing behind the scenes. Let’s walk through how a generative audio model works with deep learning, step by step:

Audio is converted into machine-understandable formats, such as spectrograms or Mel-frequency cepstral coefficients (MFCCs). These representations capture the essential characteristics of sound, making it easier for models to learn patterns like pitch and rhythm.
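
To make this step concrete, here is a minimal sketch that turns a waveform into a log-magnitude spectrogram using only NumPy. The frame length, hop size, 16 kHz sample rate, and the 440 Hz test tone are illustrative assumptions, not values taken from any specific model. In practice, a library such as Librosa would usually handle this step, along with Mel scaling and MFCC extraction.

```python
import numpy as np

def log_spectrogram(wave, frame_len=1024, hop=256):
    """Return a (frames, bins) log-magnitude spectrogram of a 1-D signal."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(wave) - frame_len) // hop
    # Slice the signal into overlapping frames and taper each one.
    frames = np.stack([
        wave[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    # One FFT per frame gives the frequency content over time.
    magnitude = np.abs(np.fft.rfft(frames, axis=1))
    # log1p compresses the dynamic range and is safe at zero.
    return np.log1p(magnitude)

sample_rate = 16000  # assumed sample rate in Hz
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440.0 * t)  # one second of a 440 Hz test tone

spec = log_spectrogram(tone)
peak_bin = int(spec.mean(axis=0).argmax())
peak_hz = peak_bin * sample_rate / 1024  # bin index -> frequency in Hz
```

The strongest frequency bin lands within one bin width of 440 Hz, which is the kind of pitch information a model can learn from.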

Different neural network architectures are used to generate audio:

- WaveNet: An autoregressive model that generates audio sample by sample, with each new sample conditioned on the ones before it, creating highly realistic sound.

- GANs (Generative Adversarial Networks): These consist of a generator and a discriminator, working together to produce lifelike audio. Wasserstein GANs help improve quality by stabilizing training.
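
The autoregressive idea can be sketched with a toy example: each new sample is predicted from the samples already generated and then fed back in. The `next_sample` function below is a hypothetical stand-in for a trained network (it is a fixed two-tap linear predictor, not WaveNet), and the frequency and sample rate are assumptions chosen for illustration.

```python
import math

def next_sample(history, omega):
    """Toy stand-in for a trained network: predict x[n] from x[n-1] and x[n-2]."""
    return 2.0 * math.cos(omega) * history[-1] - history[-2]

def generate(n_samples, freq_hz=440.0, sample_rate=16000):
    """Generate audio one sample at a time, feeding each output back as input."""
    omega = 2.0 * math.pi * freq_hz / sample_rate
    samples = [0.0, math.sin(omega)]  # two seed samples start the loop
    while len(samples) < n_samples:
        samples.append(next_sample(samples, omega))
    return samples

audio = generate(1000)
```

This particular predictor reproduces a pure sine wave. A real autoregressive model replaces `next_sample` with a deep network that outputs a probability distribution over the next sample.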

The model is trained to minimize the difference between real and generated audio using loss functions like Wasserstein loss in GANs. Over time, it learns the patterns in the data, improving its ability to generate realistic sounds.
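
As a rough illustration of the Wasserstein loss mentioned above, the sketch below computes the two quantities being optimized from lists of critic scores. The function names and the example scores are hypothetical; a real setup would compute these inside a framework like PyTorch or TensorFlow so that gradients can flow through them.

```python
def mean(values):
    return sum(values) / len(values)

def critic_loss(real_scores, fake_scores):
    """The critic tries to score real audio higher than generated audio."""
    return mean(fake_scores) - mean(real_scores)

def generator_loss(fake_scores):
    """The generator tries to raise the critic's score on its own output."""
    return -mean(fake_scores)

# Made-up critic outputs for a batch of three real and three generated clips.
real_scores = [0.9, 1.1, 1.0]
fake_scores = [-0.5, -0.3, -0.4]

c_loss = critic_loss(real_scores, fake_scores)  # about -1.4
g_loss = generator_loss(fake_scores)            # about 0.4
```

Note that Wasserstein GANs also need a constraint on the critic, such as weight clipping or a gradient penalty, which this sketch leaves out.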

When a model outputs spectrograms rather than raw waveforms, the result needs post-processing before it becomes playable sound. Techniques like Griffin-Lim reconstruct waveforms from magnitude spectrograms by iteratively estimating the missing phase, so the final output is clear and usable.

Benefits of Generative Audio Models

AI-driven audio generation can significantly reduce the time spent on producing sound effects or music tracks. Instead of starting from scratch, creators can leverage AI to generate content faster.

Traditional audio production, especially for things like soundtracks or voiceovers, can be costly. With AI audio generation, businesses can reduce the need for expensive studio time or hiring voice actors.

Generative audio models enable hyper-personalized audio experiences, such as customized music tracks for individuals or even tailored AI voice synthesis for customer service applications.

By using AI to generate new audio content, creators can explore endless possibilities and push the boundaries of sound design and composition.

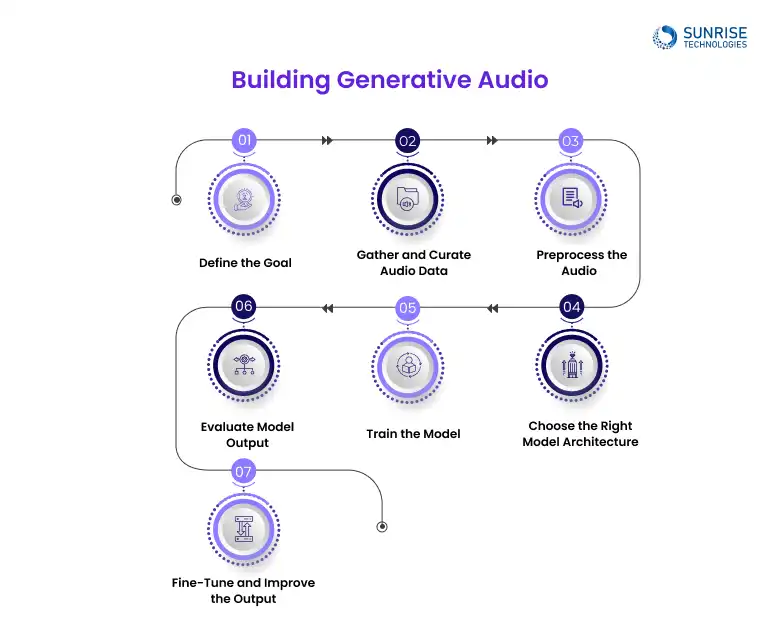

How to Build a Generative Audio Model from Scratch

Creating a Generative Audio Model requires a structured blend of domain-specific data, deep learning algorithms, and the right model architecture. With the right process and tools, anyone, from machine learning engineers to creative technologists, can build a fully functional AI audio generation model.

Here’s a step-by-step approach to bring your model to life:

Clearly identifying the use case helps shape your dataset and model architecture. For AI voice synthesis or music composition, your objective influences every decision ahead.

- For AI voice synthesis, define parameters like emotion, pitch control, speaker variation, and naturalness.

- For music generation AI, decide on genres, instruments, style (melodic vs. harmonic), and whether you want real-time generation or batch synthesis.

- Understanding your target output (speech, music, ambient sound) aligns the technical workflow from the start.

The success of an AI audio generation model heavily depends on the quality and diversity of its training data. Audio must be rich in variation and annotated properly for better learning outcomes.

- Collect domain-specific data: MIDI files for music, and multilingual voice clips for speech synthesis.

- Ensure a wide range of attributes, such as accents, tones, instruments, and tempos, to improve generalization.

- Prefer open datasets like LibriSpeech (speech) or NSynth (music) for robust modeling.

Raw audio data needs to be transformed into meaningful input formats for neural networks. This step involves feature extraction and noise reduction to preserve important acoustic characteristics.

- Convert audio into spectrograms, Mel spectrograms, or MFCCs (Mel-frequency cepstral coefficients) for better time-frequency representation.

- Apply trimming, silence removal, and segmentation to ensure uniformity in training samples.

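
The trimming and segmentation steps above can be sketched in plain Python. The amplitude threshold, segment length, and sample values here are illustrative assumptions; real pipelines typically work on NumPy arrays and use energy-based thresholds rather than raw amplitude.

```python
def trim_silence(samples, threshold=0.01):
    """Drop leading and trailing samples quieter than the threshold."""
    loud = [i for i, s in enumerate(samples) if abs(s) >= threshold]
    if not loud:
        return []
    return samples[loud[0] : loud[-1] + 1]

def segment(samples, length):
    """Split into fixed-length segments, zero-padding the final one."""
    segments = []
    for start in range(0, len(samples), length):
        chunk = samples[start : start + length]
        chunk = chunk + [0.0] * (length - len(chunk))
        segments.append(chunk)
    return segments

clip = [0.0, 0.0, 0.5, -0.4, 0.3, 0.2, -0.1, 0.0, 0.0]
trimmed = trim_silence(clip)         # [0.5, -0.4, 0.3, 0.2, -0.1]
chunks = segment(trimmed, length=2)  # [[0.5, -0.4], [0.3, 0.2], [-0.1, 0.0]]
```

Every segment ends up the same length, which is what lets the training loop batch samples together.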

Model architecture determines how well your system captures temporal and spectral patterns. Choosing the right structure is crucial for tasks like music generation AI or neural voice cloning.

- GANs (Generative Adversarial Networks) excel at generating realistic and complex waveforms, ideal for music.

- VAEs (Variational Autoencoders) offer controlled generation through latent variables, suitable for diverse voice synthesis.

- RNNs, GRUs, and Transformers are powerful for sequential data modeling, capturing long-term dependencies in audio.

Training an AI audio generation model involves optimizing weights through gradient descent and loss functions. This step consumes significant computational resources and demands fine-tuning.

- Use frameworks like TensorFlow, PyTorch, or JAX to build, train, and deploy your model.

- Choose a loss function: Mean Squared Error (MSE) for regression, or adversarial loss in GANs for more realistic output.

- Implement learning rate schedules, batch normalization, and dropout to stabilize training and reduce overfitting.
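
One common form of learning rate schedule is a linear warmup followed by cosine decay. The sketch below is a hypothetical helper with assumed default values, not a setting from any particular audio model; TensorFlow, PyTorch, and JAX-based libraries each ship their own scheduler utilities.

```python
import math

def learning_rate(step, base_lr=1e-3, warmup_steps=100,
                  total_steps=1000, min_lr=1e-5):
    """Linear warmup to base_lr, then cosine decay down to min_lr."""
    if step < warmup_steps:
        # Ramp up gradually so early updates do not destabilize training.
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    # Cosine curve from base_lr (progress 0) to min_lr (progress 1).
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

schedule = [learning_rate(s) for s in (0, 99, 100, 550, 1000)]
```

The rate starts small, peaks at `base_lr` once warmup ends, and then falls smoothly toward `min_lr` by the final step.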

Evaluating the quality of generated audio ensures that your model isn’t just producing noise. Use both objective metrics and human evaluation to validate realism and fidelity.

- For speech synthesis, assess naturalness and perceived quality using MOS (Mean Opinion Score, gathered from human listeners) and PESQ (Perceptual Evaluation of Speech Quality, an objective metric).

- For music generation AI, evaluate harmonic consistency, rhythm patterns, and instrument realism.

- Track metrics like spectral convergence, pitch accuracy, and log-likelihood over test samples.
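
Spectral convergence, one of the metrics listed above, is commonly defined as the Frobenius norm of the difference between the reference and generated magnitude spectrograms, divided by the norm of the reference. Here is a minimal sketch, assuming both spectrograms are equal-sized nested lists with made-up values:

```python
import math

def spectral_convergence(reference, generated):
    """||reference - generated||_F / ||reference||_F; lower is better."""
    diff_sq = sum(
        (r - g) ** 2
        for ref_row, gen_row in zip(reference, generated)
        for r, g in zip(ref_row, gen_row)
    )
    ref_sq = sum(r ** 2 for row in reference for r in row)
    return math.sqrt(diff_sq) / math.sqrt(ref_sq)

reference = [[3.0, 4.0], [0.0, 0.0]]
perfect = spectral_convergence(reference, [[3.0, 4.0], [0.0, 0.0]])  # 0.0
partial = spectral_convergence(reference, [[3.0, 1.0], [0.0, 0.0]])  # 0.6
silent = spectral_convergence(reference, [[0.0, 0.0], [0.0, 0.0]])   # 1.0
```

A score of 0 means the spectrograms match exactly, while a score of 1 is what a silent output would get.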

Fine-tuning helps refine the AI audio generation model by optimizing specific elements of the output. Techniques like transfer learning and hyperparameter tuning help boost performance further.

- Adjust learning rates, model depth, and activation functions for better training outcomes.

- Apply transfer learning using pre-trained audio models like MelGAN or WaveNet for faster convergence.

- Use curriculum learning: start with simple patterns, then progressively introduce complex audio structures.
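
Curriculum learning can be sketched as sorting the training clips by some difficulty score and widening the training set stage by stage. The clip names, the `n_notes` field, and the use of note count as a difficulty proxy are all hypothetical choices made for this example.

```python
def curriculum_stages(clips, difficulty, n_stages=3):
    """Yield growing training subsets, easiest clips first."""
    ordered = sorted(clips, key=difficulty)
    for stage in range(1, n_stages + 1):
        # Ceiling division so the last stage always covers every clip.
        cutoff = -(-len(ordered) * stage // n_stages)
        yield ordered[:cutoff]

clips = [
    {"name": "chord_progression", "n_notes": 12},
    {"name": "single_note", "n_notes": 1},
    {"name": "full_arrangement", "n_notes": 48},
]

stages = list(curriculum_stages(clips, difficulty=lambda c: c["n_notes"]))
stage_names = [[c["name"] for c in stage] for stage in stages]
```

The first stage trains only on the simplest clip, and each later stage adds harder material while keeping the easier clips in the mix.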

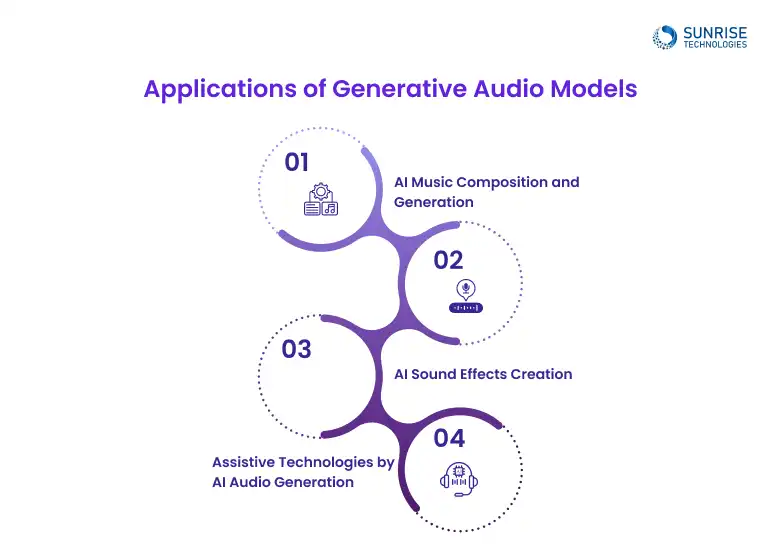

Applications of Generative Audio Models

From redefining music creation to enabling assistive communication, generative audio models have a vast range of applications across industries. These AI audio generation tools combine deep learning with audio engineering to generate new, realistic, and personalized sound content.

Generative audio models are transforming the creative process for composers, producers, and hobbyists by autonomously generating musical patterns, harmonies, and rhythms. This is a leading use case of music generation AI in modern content creation.

- Models like MuseNet and Jukebox use deep neural networks to generate polyphonic music with complex temporal dependencies.

- Musicians use these tools to co-create ideas, explore new genres, or even complete unfinished tracks.

- Enables royalty-free music creation at scale for content creators and indie developers.

So, what is the best model for AI voice synthesis? That is often the first question teams ask. AI voice synthesis leverages models trained on real human speech to generate lifelike voices, useful in everything from voice assistants to audiobook narration.

- Tools like Tacotron 2, FastSpeech, and WaveNet generate smooth, human-like intonations and prosody.

- Widely used in virtual assistants, call center bots, and personalized digital avatars.

- Supports multilingual synthesis, speaker adaptation, and emotion modeling for dynamic conversations.

For game developers and sound designers, generative audio models can automate the production of sound effects that traditionally require extensive foley work or sampling.

- Deep learning models trained on environmental and action-based sounds can generate unique effects like explosions, footsteps, or wind.

- Reduces the manual recording workload and allows dynamic sound effect generation in real-time environments (e.g., adaptive game scenes).

- Enhances productivity in post-production for animation, gaming, and VFX.

One of the most impactful applications is in assistive technologies, where AI audio generation gives a voice to those who are non-verbal or have speech impairments.

- Personalized text-to-speech (TTS) systems can replicate the tone and style of a user’s voice using minimal training samples.

- AI-powered speech prosthetics are now aiding individuals with ALS or vocal disorders.

- Also used in accessibility tools like audio captions, reading aids, and speech-to-speech translators.

Factors Influencing the Cost of Building a Generative Audio Model

Developing a simple autoencoder or GAN for basic audio generation is more affordable than building transformer-based models like Jukebox or MusicLM that require extensive training and data.

The quality and size of your training dataset play a crucial role. Licensing high-quality audio data or curating custom datasets adds to the cost.

Training generative models, especially those involving WaveNet, diffusion models, or Transformer architectures, requires powerful GPUs or TPUs, which directly impacts infrastructure cost.

Fine-tuning a pre-trained model for specific genres, languages, or voice styles can save costs, but building models from scratch with proprietary features significantly increases development time and budget.

Costs also rise if you need seamless integration with web/mobile apps or backend services, plus post-deployment support and optimization.

- Prototype/MVP: $8,000 – $20,000

- Custom Mid-Level Model: $25,000 – $60,000

- Enterprise-Grade Audio Generation System: $75,000 – $150,000+

Technology Behind Generative Audio Models

The success of generative audio models lies in a solid foundation of cutting-edge technologies, ranging from neural networks and deep learning to scalable machine learning frameworks and cloud-based GPU infrastructures.

Let’s explore each layer of this tech stack that fuels AI audio generation.

Neural networks are the building blocks of AI audio generation models, enabling machines to process and generate time-based audio sequences with realistic fidelity.

- Recurrent Neural Networks (RNNs) are great at handling sequential audio data like speech or music.

- Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) allow the model to retain important audio context across longer sequences.

- Convolutional Neural Networks (CNNs) are used for audio classification tasks by analyzing spectrogram images.

- Transformer models with attention mechanisms are increasingly popular for their ability to capture both local and global patterns in audio.
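
To show how attention lets every audio frame look at every other frame, here is a minimal single-head scaled dot-product self-attention sketch in NumPy. The frame count, feature sizes, and random projection matrices are assumptions for illustration; a trained Transformer learns these weights and stacks many such layers.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a frame sequence."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # Similarity of every frame to every other frame, scaled for stability.
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Softmax over each row (subtracting the max avoids overflow).
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    # Each output frame is a weighted mix of all value vectors.
    return weights @ v, weights

rng = np.random.default_rng(0)
frames = rng.normal(size=(6, 8))  # 6 audio frames, 8 features each
w_q, w_k, w_v = (rng.normal(size=(8, 4)) for _ in range(3))

context, weights = self_attention(frames, w_q, w_k, w_v)
```

Each row of `weights` sums to 1 and tells you how much that frame draws on every other frame, whether nearby or far away in the clip.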

Deep learning enables generative audio models to learn complex patterns and structures within raw audio signals and spectrograms.

- Deep architectures can analyze both time-domain waveforms and frequency-domain representations (like Mel spectrograms).

- Autoencoders and Variational Autoencoders (VAEs) help learn compressed representations of sound data for efficient generation.

- Generative Adversarial Networks (GANs) are widely used for high-quality, creative sound synthesis in both speech and music.

- Models learn hierarchical features of audio, capturing elements like rhythm, pitch, and phoneme structures.
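
Two pieces sit at the heart of a VAE: sampling a latent vector with the reparameterization trick, and the KL divergence term that keeps the latent space well behaved. The sketch below shows both in NumPy under the standard Gaussian-prior assumption; the latent size and example values are made up, and the encoder and decoder networks are left out.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps, keeping the randomness in eps."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_divergence(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var), axis=-1)

rng = np.random.default_rng(0)
mu = np.array([[1.0, 0.0]])   # pretend encoder output for one clip
log_var = np.zeros((1, 2))    # log-variance 0 means sigma = 1

z = reparameterize(mu, log_var, rng)  # latent sample to feed a decoder
kl = kl_divergence(mu, log_var)       # array([0.5])
```

During training, this KL term is added to the reconstruction loss, which is what encourages smooth latent spaces that can be sampled to produce new sounds.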

At the core of every generative audio system is a well-structured machine learning audio model, trained using powerful open-source frameworks.

- TensorFlow and PyTorch are the most popular libraries for training and deploying custom audio models.

- Librosa is often used for audio preprocessing, while Hugging Face Transformers supports model fine-tuning for audio tasks.

- Training datasets like LibriSpeech, Common Voice, or NSynth offer high-quality audio samples for diverse applications—from voice cloning to music generation.

- Models are optimized using loss functions like MSE (Mean Squared Error), Spectrogram Loss, or Adversarial Loss for GANs.

To train complex AI audio generation models, access to cloud resources and GPU acceleration is essential. These infrastructures handle large-scale computation for faster and more efficient training.

- Platforms like Google Cloud AI, AWS SageMaker, and Azure ML offer scalable environments for model development and deployment.

- NVIDIA GPUs and Google TPUs are used for high-speed processing of spectrogram data and deep neural network layers.

- For real-time generation, edge AI or cloud-based APIs deliver on-demand audio services like voice synthesis or music streaming.

- Distributed training strategies like data parallelism or model parallelism help scale training across multiple GPUs.
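
Data parallelism can be illustrated without any real GPUs: split a batch into equal shards, compute the gradient on each shard as if it were on its own device, then average the results. The linear model, shard count, and random data below are assumptions for the sake of the example.

```python
import numpy as np

def mse_gradient(weights, inputs, targets):
    """Gradient of mean squared error for the linear model inputs @ weights."""
    errors = inputs @ weights - targets
    return 2.0 * inputs.T @ errors / len(targets)

rng = np.random.default_rng(0)
inputs = rng.normal(size=(8, 3))
targets = rng.normal(size=8)
weights = np.zeros(3)

# Simulate 4 devices, each receiving an equal shard of the batch.
shard_grads = [
    mse_gradient(weights, x, y)
    for x, y in zip(np.split(inputs, 4), np.split(targets, 4))
]
averaged = np.mean(shard_grads, axis=0)  # the "all-reduce" step

full_batch = mse_gradient(weights, inputs, targets)
```

With equal-sized shards, the averaged gradient matches the full-batch gradient, which is why data parallelism speeds up training without changing the update itself.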

Real-World Use Cases of Generative Audio Models

Alexa’s Enhanced Voice Using AI Voice Synthesis

Amazon uses Generative Audio Models to power Alexa, their smart voice assistant, with more expressive and personalized voice interactions.

- Using neural text-to-speech networks (the same family of techniques as Tacotron 2 and WaveGlow), Amazon created Alexa’s Newscaster Voice, which adjusts tone and intonation based on content type, like reading news or giving weather updates.

- In the healthcare sector, Amazon supports HIPAA-eligible Alexa skills, which can use AI voice synthesis to deliver personalized medical reminders or patient care instructions with clarity and empathy.

These enhancements are powered by cloud-based machine learning audio models trained on diverse voice datasets to ensure natural dialogue and contextual fluency.

Personalized Music Recommendations with AI-Generated Audio

Spotify, a global leader in music streaming, is experimenting with AI audio generation tools to personalize user listening experiences beyond just recommendations.

- Through their acquisition of startups like Sonantic, Spotify is exploring AI-generated voice narration for podcasts and dynamic audio ads, bringing human-like storytelling at scale.

- They are also testing AI-generated background scores for mood-based playlists using deep learning for audio synthesis, allowing users to discover custom-made instrumental tracks tailored to their preferences and emotions.

These models leverage sequence modeling and attention mechanisms to predict what audio patterns best fit user taste.

MusicLM for AI-Generated Music Composition

Google has developed MusicLM, a music generation AI model that creates high-fidelity music tracks from textual prompts.

- The model uses Transformer-based architectures trained on large-scale audio-text datasets to generate music that matches input descriptions like “relaxing jazz with a piano solo” or “90s-style hip-hop beat.”

- MusicLM goes beyond simple melody creation; it synthesizes full compositions with structure, rhythm, and tonal consistency, opening new doors for music production tools and content creators.

This showcases how deep learning for audio can turn textual creativity into rich, studio-grade compositions.

Audio2Face for Real-Time Character Lip Sync

NVIDIA is leveraging machine learning audio models in its Audio2Face tool, used in game development and animation.

- Audio2Face uses deep neural networks to map audio input to realistic 3D facial animations in real time, perfect for games, metaverse avatars, and cinematic productions.

- Studios using NVIDIA Omniverse integrate AI-generated voice and expression to automate character animation without manual rigging.

The system processes audio waveforms through pretrained models to drive facial expressions, syncing emotion and voice naturally.

How Sunrise Technologies Helps with Generative Audio Models

We empower businesses to unlock the full creative and commercial potential of generative audio models through tailored, end-to-end AI solutions. Whether you want to create immersive soundscapes, deliver hyper-personalized audio experiences, or implement a cutting-edge AI speech recognition system, we provide the tools and expertise to make it happen.

We craft custom AI app development strategies focused on real-world audio challenges and business outcomes.

- Custom AI App Development: From music generation to voice cloning, we architect and train models using deep learning for audio that’s fine-tuned to your data and objectives.

- AI App Development Expertise: Leveraging industry-leading frameworks like TensorFlow and PyTorch, our team designs scalable audio systems that integrate seamlessly into your digital ecosystem.

- Scalable AI Development Infrastructure: Our solutions are cloud-deployable, powered by GPU-accelerated training pipelines that optimize performance and speed.

- AI Speech Recognition System Integration: Enhance your products with intelligent voice interfaces or transcription engines that combine generative modeling with real-time recognition capabilities.

Future of Generative Audio Models

As AI continues to redefine the audio tech landscape, the future of generative audio models is headed toward hyper-realism and real-time adaptability.

- Expect real-time voice synthesis in gaming, education, and virtual environments.

- Autonomous music generation AI could soon power on-the-fly background scores in streaming platforms and AR/VR worlds.

- With improvements in AI development, we’ll see tighter integration between generative audio models and AI speech recognition systems, enabling smarter conversational interfaces.

From automated sound design to AI-generated voiceovers, generative audio models are transforming how we create and experience sound.

Partnering with a top AI App Development company like Sunrise Technologies ensures that your journey into AI app development solutions is backed by technical excellence and strategic insight. With advanced AI development methods and a focus on building tailored, scalable solutions, we help you shape the future of sound, one neural network at a time.

To create a generative audio model with deep learning, you’ll need to collect a large audio dataset, preprocess it into a format suitable for training (such as spectrograms), choose a model architecture like a GAN or RNN, and train it using a machine learning framework. Explore our Generative AI Development Services to learn more or get in touch with our experts today.

The development cost of a generative audio model varies based on the complexity, training data volume, and the choice of AI model (e.g., WaveNet, Jukebox, or DiffWave). On average:

- Basic prototype (using open-source models + minimal tuning): $10,000–$25,000

- Custom-trained mid-range model (with dataset collection & tuning): $30,000–$75,000

- Enterprise-level model (custom datasets, multi-language support, real-time synthesis): $100,000+

AI voice synthesis uses neural networks for sound to replicate human speech patterns by training on voice data. These models can generate realistic human voices for virtual assistants and other applications.

You can use machine learning for audio synthesis by training generative audio models on large audio datasets, leveraging architectures like RNNs or GANs to generate realistic audio content like music or speech.

The future of generative audio models is focused on improving the realism and creativity of AI-generated content, with applications in music, voice synthesis, and sound design continuing to grow across industries.

Sam is a chartered professional engineer with over 15 years of extensive experience in the software technology space. Over the years, Sam has held the position of Chief Technology Consultant for tech companies both in Australia and abroad before establishing his own software consulting firm in Sydney, Australia. In his current role, he manages a large team of developers and engineers across Australia and internationally, dedicated to delivering the best in software technology.