Multimodal AI: How It Works and Real-World Use Cases

May 21, 2025

Multimodal AI is redefining how we interact with data by interpreting and merging text, images, audio, and sensor inputs into one unified understanding. From computer vision to natural language processing (NLP), businesses are increasingly adopting multimodal systems to interpret, analyze, and respond to data more intelligently and contextually.

- According to Grand View Research, the global AI market is projected to hit USD 733.7 billion by 2027, with multimodal AI standing out as one of its fastest-growing segments.

- According to Gartner, by 2025 around 60% of enterprises will be deploying multimodal AI models to elevate decision-making, up from just 20% in 2023.

This momentum reflects the growing demand for intelligent systems that can process several data types at once, which is crucial for applications like autonomous vehicles, personalized healthcare, and smart retail environments. Let’s dive into how multimodal AI is transforming industries and what makes it a must-have for the AI-first future.

What is Multimodal AI and Real-World Examples

Multimodal AI refers to AI systems capable of understanding and processing information from multiple data modalities, such as text, image, video, audio, and sensor input. Unlike traditional unimodal models that rely on a single type of data, multimodal models merge diverse data streams to derive deeper insights.

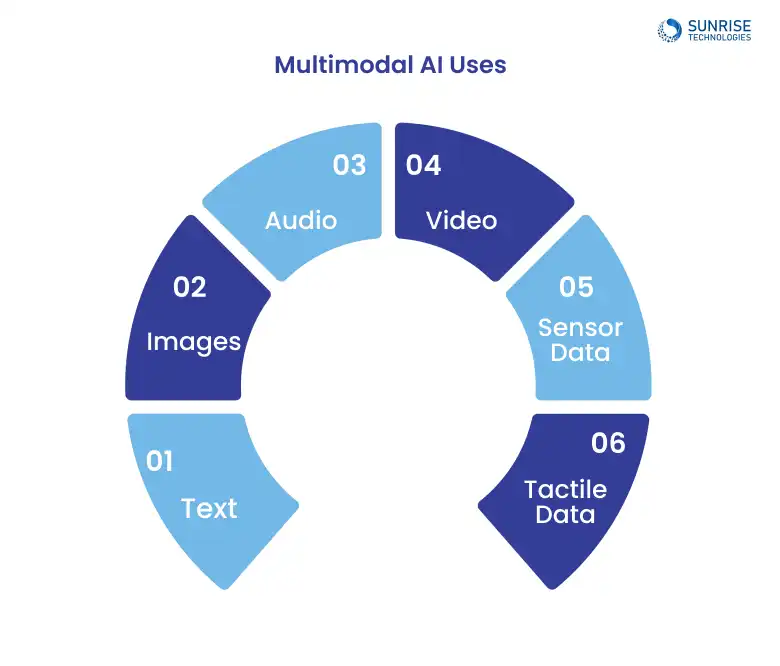

Different forms of data include:

1. Text: Written language, documents, captions.
2. Images: Static visual data, photographs.
3. Audio: Sound waves, speech, music.
4. Video: Sequences of images with accompanying audio.
5. Sensor Data: Time-series data from various sensors (e.g., temperature, motion).
6. Tactile Data: Information related to touch and physical interaction.

Instead of analyzing each modality in isolation (like traditional unimodal AI models), Multimodal AI aims to find relationships and correlations between these different forms of data to gain a richer, more comprehensive understanding.

Real-world examples:

- In healthcare, systems that combine X-ray images with doctors’ notes for diagnosis.

- In retail, AI that interprets customer speech along with facial expressions for better service.

- In autonomous driving, vehicles use computer vision, radar, and natural language processing (NLP) for decision-making.

Generative AI vs. Unimodal vs. Multimodal: A Comparison

AI models come in different flavors, each designed to solve specific problems. A common starting question is how multimodal AI differs from unimodal AI, and understanding the differences can help you choose the right tool for your needs. Here’s a breakdown to guide you.

| Feature | Unimodal AI | Generative AI | Multimodal AI |
|---|---|---|---|
| Data Input Type | Single modality | Often text or image | Multiple modalities (text + image + audio + more) |
| Output Generation | Predictive or classification | Content generation (e.g., text, images, videos) | Predictive + Content + Correlated insights |
| Real-World Applications | Basic chatbots, image classifiers | ChatGPT, DALL·E, Music Generation | Smart assistants, healthcare diagnostics, autonomous vehicles |
| Learning Style | Traditional, single modality-focused | Self-supervised, learning from data generation | Cross-modal learning; integrates different data types for context |
| Key Strengths | Focused and precise in specific tasks | Creativity in generating new content based on patterns | Enhanced decision-making through data fusion across modalities |

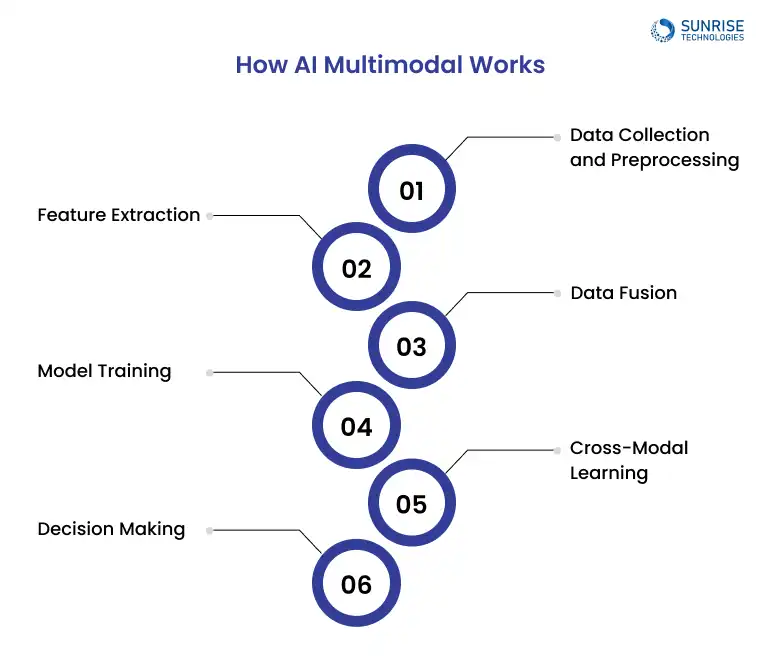

How Multimodal AI Works

Multimodal AI works by extracting features from each data type and fusing them into a shared representation. The steps below show how NLP, vision models, and cross-modal learning fit together to improve data interpretation.

1. Data Collection and Preprocessing

Each modality (text, image, video, audio, etc.) is collected and preprocessed to normalize formats, reduce noise, and extract relevant features from raw data.

2. Feature Extraction

Using deep learning architectures, such as CNNs for images and transformers for text, features are extracted to represent the core semantics of each modality.

3. Data Fusion

Multimodal data is integrated using early, late, or hybrid fusion techniques, aligning information from different sources into a unified representation space.

4. Model Training

The fused data is used to train models capable of understanding and generating cross-modal outputs, leveraging NLP and vision models for enhanced learning.

5. Cross-Modal Learning

The trained model learns inter-modality relationships—for instance, aligning textual sentiment with facial expressions or visual scenes.

6. Decision Making

With enriched understanding, the system performs complex tasks like predictions, classifications, or generative outputs across modalities with higher accuracy.

This fusion technique improves machine learning accuracy and enables more natural, human-like AI interactions.
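The six steps above can be sketched end to end in a few lines of Python. This is a toy illustration under stated assumptions, not a production pipeline: the hand-written feature extractors stand in for the CNNs and transformers from step 2, and every function name (`extract_text_features`, `extract_image_features`, `fuse`, `decide`) and every weight is hypothetical.

```python
import math

# Toy stand-ins for learned encoders (assumption: a real system would use
# a transformer for text and a CNN for images, as described above).
POSITIVE = {"good", "great", "happy"}
NEGATIVE = {"bad", "poor", "angry"}

def extract_text_features(text):
    """Steps 1-2 for text: lowercase, tokenize, count sentiment words."""
    tokens = [t.strip(".,!?") for t in text.lower().split()]
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    return [float(pos), float(neg)]

def extract_image_features(pixels):
    """Steps 1-2 for images: scale 0-255 pixels to 0-1, then summarize."""
    scaled = [p / 255.0 for p in pixels]
    mean = sum(scaled) / len(scaled)
    var = sum((p - mean) ** 2 for p in scaled) / len(scaled)
    return [mean, math.sqrt(var)]

def fuse(*feature_vectors):
    """Step 3 (early fusion): concatenate per-modality feature vectors."""
    return [x for vec in feature_vectors for x in vec]

def decide(fused, weights, bias=0.0):
    """Step 6: a linear score passed through a sigmoid."""
    score = sum(w * x for w, x in zip(weights, fused)) + bias
    return 1.0 / (1.0 + math.exp(-score))

text_vec = extract_text_features("Great service, very happy!")
image_vec = extract_image_features([200, 220, 210, 230])
fused = fuse(text_vec, image_vec)

# Hypothetical hand-picked weights; steps 4-5 would learn these from data.
probability = decide(fused, weights=[1.0, -1.0, 0.5, 0.0])
print(len(fused), round(probability, 3))
```

In a real system the weights would come out of training rather than being set by hand, and the feature vectors would have hundreds or thousands of dimensions instead of two per modality.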

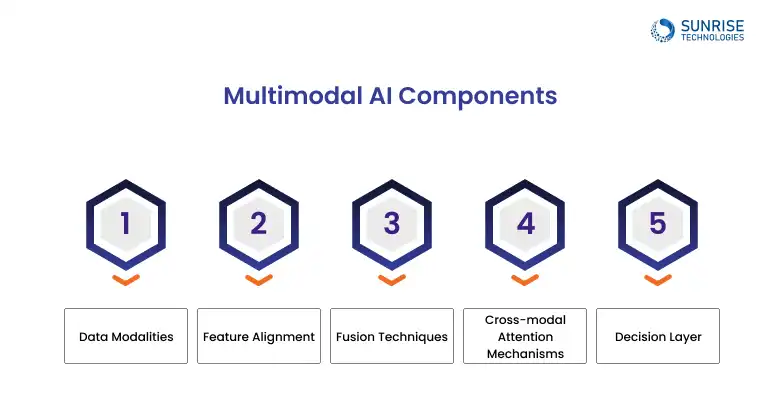

Core Components of a Multimodal AI System

Multimodal AI systems leverage multiple data types to offer deeper insights and better decision-making. These components combine text, image, audio, and sensor data to improve AI understanding.

Data Modalities

Multimodal AI works with data from text, audio, images, video, and sensor data. This variety allows AI systems to build a more comprehensive view of a given situation.

Feature Alignment

Models such as CNNs for images and transformers for text extract key features from each modality. These features are then mapped into a comparable representation so that information from one modality can be related to information from another.

Fusion Techniques

Fusion combines data from various modalities to create a unified representation. Early fusion merges data at the input stage, while late fusion combines final predictions.
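To make the distinction concrete, here is a minimal sketch contrasting the two strategies on toy feature vectors. The feature values, weights, and function names are illustrative assumptions and are not taken from any particular framework.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def linear_model(features, weights):
    """A tiny classifier: weighted sum followed by a sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)))

def early_fusion(text_feats, audio_feats, joint_weights):
    """Concatenate features first, then run ONE model on the joint vector."""
    return linear_model(text_feats + audio_feats, joint_weights)

def late_fusion(text_feats, audio_feats, text_weights, audio_weights, alpha=0.5):
    """Run one model PER modality, then blend their predictions."""
    p_text = linear_model(text_feats, text_weights)
    p_audio = linear_model(audio_feats, audio_weights)
    return alpha * p_text + (1 - alpha) * p_audio

text_feats = [0.9, 0.1]    # hypothetical text features
audio_feats = [0.2, 0.7]   # hypothetical audio features

p_early = early_fusion(text_feats, audio_feats, [1.0, -1.0, 0.5, -0.5])
p_late = late_fusion(text_feats, audio_feats, [1.0, -1.0], [0.5, -0.5])
print(f"early={p_early:.3f} late={p_late:.3f}")
```

As a rule of thumb, early fusion lets a single model learn interactions between modalities, while late fusion keeps each modality's model independent, which can make it easier to swap one out. Hybrid approaches mix the two.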

Cross-modal Attention Mechanisms

Cross-modal attention mechanisms allow AI to prioritize data from different modalities. This helps the model focus on the most relevant input for accurate analysis.
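One common way to implement this idea is scaled dot-product attention, where a query from one modality scores the elements of another. The sketch below assumes a single text query attending over three image regions. The embeddings are made-up numbers and `cross_modal_attention` is a hypothetical helper, so treat it as an illustration of the mechanism rather than any specific model's implementation.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_modal_attention(query, keys, values):
    """Scaled dot-product attention: one text query over image regions."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    # Context vector: attention-weighted sum of the region values.
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context

text_query = [1.0, 0.0]  # hypothetical embedding of a word such as "dog"
region_keys = [[4.0, 0.0], [0.0, 4.0], [0.0, 0.0]]
region_values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

weights, context = cross_modal_attention(text_query, region_keys, region_values)
print([round(w, 3) for w in weights])
```

The first region receives most of the weight because its key points in the same direction as the query. In a trained model, queries, keys, and values would be produced by learned projections rather than written by hand.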

Decision Layer

The decision layer synthesizes all inputs to generate insights. It processes the aligned data and provides the AI’s final prediction or action.

Technical Pillars of Multimodal AI and ML

Multimodal AI is built upon advanced foundations in artificial intelligence and machine learning that enable it to process and learn from multiple types of data like text, images, video, and audio simultaneously.

Multimodal Artificial Intelligence:

This refers to AI systems that can interpret and generate outputs using multiple data modalities. From virtual assistants that understand voice and gestures to AI copilots in smart vehicles, multimodal artificial intelligence is at the core of next-gen interaction.

Multimodal Machine Learning:

This branch of ML focuses on designing models that can learn from multiple input types. It allows machines to find correlations and insights by fusing heterogeneous data sources.

Multimodal Deep Learning:

By leveraging deep learning architectures like CNNs and RNNs across modalities, multimodal deep learning enables more accurate pattern recognition, enhanced context understanding, and richer data representations.

Multimodal Neural Networks:

These specialized neural networks are designed to integrate multiple inputs into a single cohesive understanding, enabling applications like image captioning, audio-visual speech recognition, and emotion analysis in videos.

Natural Language Processing (NLP)

NLP helps multimodal AI understand and generate human language, making sense of text alongside visuals and sound.

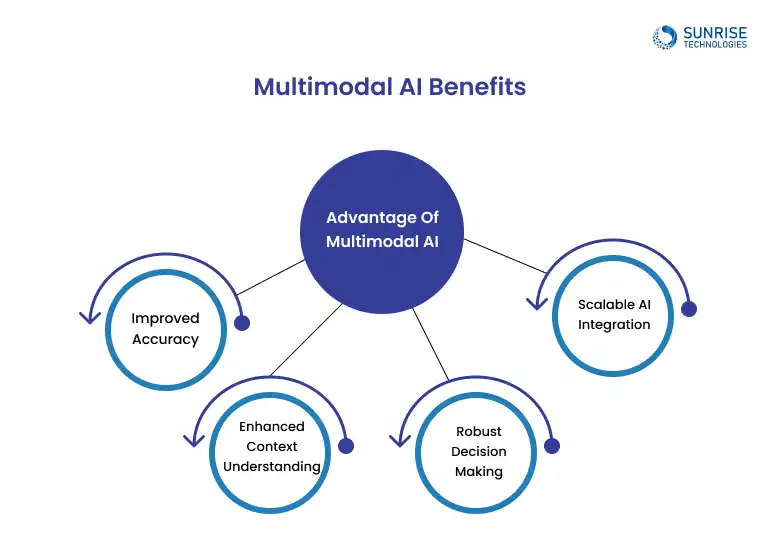

Multimodal AI combines different data types to unlock a richer, more accurate understanding of complex scenarios. Let’s look at the benefits of multimodal AI for data processing and how it’s changing the way we process and act on information.

- Improved Accuracy: Blending multiple data sources like text, vision, and audio minimizes the risk of blind spots. For instance, combining visual cues with speech in video analysis helps AI grasp the full context and reduce interpretational errors.

- Enhanced Context Understanding: Multimodal models can “see, hear, and read” simultaneously, enabling deeper comprehension. This is especially critical in customer support or medical diagnostics, where cross-referencing symptoms, visuals, and voice input leads to more precise responses.

- Robust Decision-Making: When one data stream is noisy or missing, others can fill in the gaps. This redundancy builds resilience, allowing AI systems to continue functioning effectively even under imperfect conditions.

- Scalable AI Integration: Multimodal AI frameworks are adaptable and can slot into existing data ecosystems. With APIs and modular architectures, businesses can scale AI solutions across departments without reengineering everything from scratch.
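The robust decision-making point above can be illustrated with a small sketch: when a modality is missing, a late-fusion system can simply average over the modalities that did respond. The function name `fuse_available`, the reliability weights, and the probabilities below are all hypothetical values chosen for illustration.

```python
def fuse_available(predictions, reliabilities):
    """Weighted average over modalities that actually produced a prediction.

    predictions: dict of modality -> probability, or None if missing.
    reliabilities: dict of modality -> positive trust weight.
    """
    present = {m: p for m, p in predictions.items() if p is not None}
    if not present:
        raise ValueError("no modality available")
    total = sum(reliabilities[m] for m in present)
    return sum(reliabilities[m] * p for m, p in present.items()) / total

reliabilities = {"video": 2.0, "audio": 1.0, "text": 1.0}  # hypothetical

# All three streams available.
full = fuse_available({"video": 0.9, "audio": 0.6, "text": 0.7}, reliabilities)
# Camera feed dropped: the system still answers from audio and text.
no_video = fuse_available({"video": None, "audio": 0.6, "text": 0.7}, reliabilities)
print(round(full, 3), round(no_video, 3))
```

The prediction shifts when video is lost, but the system still returns an answer instead of failing, which is the resilience described above.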


Top Multimodal AI Models to Know

Multimodal AI is advancing rapidly, with cutting-edge models that fuse vision, language, and even audio to replicate human-like understanding. Here are some of the standout models leading the charge:

| Model | Developer | Description | Key Capabilities |
|---|---|---|---|
| GPT-4 (Multimodal) | OpenAI | Multimodal version of GPT-4 capable of understanding both text and images. | Advanced text reasoning, image understanding, cross-modal tasks |
| CLIP | OpenAI | Learns visual concepts from natural language through contrastive pre-training. | Connects text and images, enables zero-shot classification |
| DALL·E | OpenAI | Generates images from textual prompts using a transformer-based architecture. | Text-to-image generation, creative content production |
| VisualBERT | UCLA and Allen Institute for AI | Integrates visual features into BERT for joint image-text representation learning. | Visual question answering, image-based reasoning |
| Florence | Microsoft | A large-scale multimodal foundation model trained on extensive image-text data. | Image captioning, visual grounding, object recognition |
| Flamingo | DeepMind | A few-shot multimodal learner that processes image and text sequences efficiently. | Visual QA, few-shot learning, minimal fine-tuning required |
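The zero-shot classification listed for CLIP in the table rests on a simple idea: embed the image and a set of candidate text labels in the same space, then pick the label whose embedding is closest to the image. Here is a minimal sketch of that idea. It does not call CLIP itself. The three-dimensional embeddings are hand-written placeholders and `zero_shot_classify` is a hypothetical helper. A real system would obtain the vectors from the model's trained image and text encoders.

```python
import math

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def zero_shot_classify(image_embedding, label_embeddings, temperature=0.1):
    """Score each text label by cosine similarity to the image embedding."""
    img = normalize(image_embedding)
    sims = {
        label: sum(a * b for a, b in zip(img, normalize(vec)))
        for label, vec in label_embeddings.items()
    }
    # Softmax over similarities; a low temperature sharpens the distribution.
    m = max(sims.values())
    exps = {label: math.exp((s - m) / temperature) for label, s in sims.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

# Hypothetical embeddings standing in for encoder outputs.
label_embeddings = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.3, 0.1]

probs = zero_shot_classify(image_embedding, label_embeddings)
best = max(probs, key=probs.get)
print(best)
```

Because the labels are just text, new categories can be added by writing a new prompt, with no retraining of the classifier. That is what makes the approach "zero-shot".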

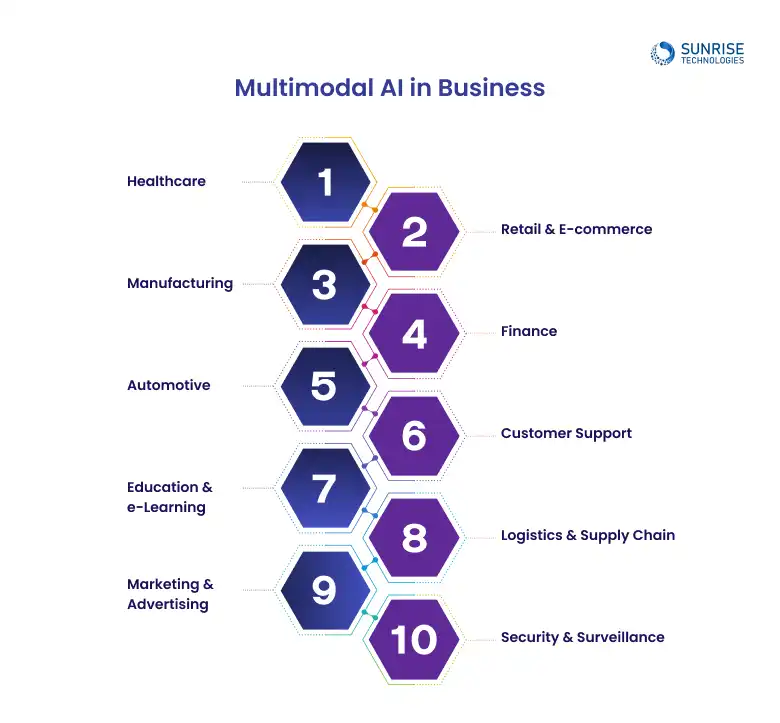

Top 10 Applications and Real-World Examples of Multimodal AI in Business

Startups and enterprises alike are tapping into Multimodal AI use cases to revolutionize user experience, automate workflows, and gain competitive edges.

Multimodal AI in healthcare combines medical imaging, electronic health records (EHR), doctor’s notes, and even patient voice inputs to deliver more accurate diagnoses and proactive care. By integrating structured and unstructured data sources, AI systems gain a holistic understanding of the patient’s condition, improving clinical decision-making and patient outcomes.

Examples: PathAI uses deep learning models like CNNs (Convolutional Neural Networks) to analyze pathology images along with clinical notes to detect diseases such as breast cancer and liver conditions with higher accuracy. Google DeepMind’s AlphaFold also combines biological sequence data with structural data to predict protein structures, transforming drug discovery pipelines.

Multimodal AI in retail creates smarter shopping journeys by fusing product visuals, user-generated content, natural language reviews, and customer browsing behavior. It powers everything from visual search and AR try-ons to personalized product recommendations and intelligent virtual assistants.

Example: Amazon employs CLIP (Contrastive Language–Image Pretraining) by OpenAI to match image uploads with text-based search queries, powering visual search and product recommendations. Zalando integrates BERT (Bidirectional Encoder Representations from Transformers) with customer preferences and outfit images to drive AI-driven fashion stylists that suggest outfits based on real-time user behavior.

Multimodal AI enhances manufacturing by processing visual inspection footage, machine sensor logs, and technician input to detect anomalies, track production efficiency, and predict equipment failures. It enables quality assurance at scale while reducing human dependency for repetitive monitoring.

Example: Bosch and Siemens use YOLOv5 (You Only Look Once) for real-time defect detection via video inspection, paired with LSTM (Long Short-Term Memory) networks to forecast machinery faults based on vibration and temperature sensor data. GE’s Predix platform integrates multimodal sensor data and uses XGBoost models to detect anomalies and improve predictive maintenance in industrial settings.

Multimodal AI in finance analyzes numerical data, financial reports, economic news, and social media sentiment to create a full-spectrum view of market trends and risk assessment. It empowers better forecasting, fraud detection, and algorithmic trading with richer context.

Example: BloombergGPT, a large language model trained on a mix of financial and general-purpose text, processes news articles and market data to support analysts. Darktrace uses Bayesian Networks and Transformer-based architectures to detect fraud by combining transactional patterns with user voice and behavioral biometrics.

Multimodal AI is key in advancing autonomous and smart vehicles. It processes data from cameras, LiDAR, GPS, voice assistants, and driver monitoring systems to support decision-making, enhance navigation, and provide real-time safety feedback.

Example: Tesla Autopilot leverages HydraNet, a proprietary multimodal neural network architecture that processes video, radar, and driver inputs. NVIDIA DRIVE uses Fusion Transformer networks to combine camera feeds, voice inputs, and 3D mapping for real-time decision-making in autonomous vehicles.

Customer service benefits immensely from multimodal AI by combining text, voice, emotion, and even visual data (like screen sharing) to understand customer intent and sentiment. This enables smarter chatbots, empathetic support, and better escalation protocols.

Example: IBM Watson Assistant uses Natural Language Understanding (NLU), Tone Analyzer, and Visual Recognition APIs to merge call transcripts, emotion tones, and on-screen interactions for smarter support automation. Cognigy and Kore.ai utilize models like OpenAI’s GPT, fused with voice-to-text engines and facial emotion detectors, to automate up to 70% of customer interactions with conversational accuracy and contextual escalation paths.

Multimodal AI in education is transforming learning platforms by analyzing text input, facial expressions, eye tracking, voice pitch, and writing styles to personalize educational content. It detects when students are disengaged or confused, then adapts content in real time.

Example: Duolingo leverages DeepSpeech (by Mozilla) for speech recognition and pronunciation analysis, along with response timing and typing accuracy to adjust difficulty levels. Carnegie Learning’s MATHia platform incorporates Multimodal Learning Analytics (MMLA) frameworks and facial recognition via OpenFace to monitor engagement and personalize lesson delivery dynamically.

In logistics, multimodal AI synchronizes real-time data from vehicle GPS, traffic footage, warehouse sensors, and inventory systems. This allows businesses to optimize delivery routes, forecast demand accurately, and detect supply chain bottlenecks before they escalate.

Example: FedEx utilizes Amazon SageMaker with object detection models (like YOLOv5 and ResNet) to analyze facility footage while syncing it with barcode scanners and IoT sensor inputs. Walmart deploys Google’s Vertex AI Vision alongside BigQuery ML to process shelf camera footage, inventory logs, and supplier data—automatically initiating restocking for high-demand items.

Marketing teams use multimodal AI to understand audiences by analyzing video ads, social media captions, emojis, facial reactions, and engagement metrics. It helps identify emotional triggers, measure ad effectiveness, and personalize campaigns.

Example: Coca-Cola partnered with Realeyes, a multimodal AI platform using Computer Vision and Emotion AI (Facial Coding), to evaluate emotional responses during ad testing. TikTok’s recommendation engine combines ByteDance’s MLP + Transformer-based model with audio, video, and textual engagement to personalize ad delivery at massive scale.

In the security industry, multimodal AI enhances surveillance systems by combining audio feeds, video footage, motion sensors, and behavioral data. It provides a contextual layer that helps differentiate between normal activity and potential threats.

Example: Changi Airport uses AnyVision (now Oosto) for multimodal face recognition combined with audio classification models to detect aggression or abandoned objects. Smart city surveillance systems in cities like Singapore use Microsoft Azure Video Analyzer and Edge AI models like OpenVINO to fuse motion detection, sound analysis, and behavioral modeling for real-time anomaly detection.

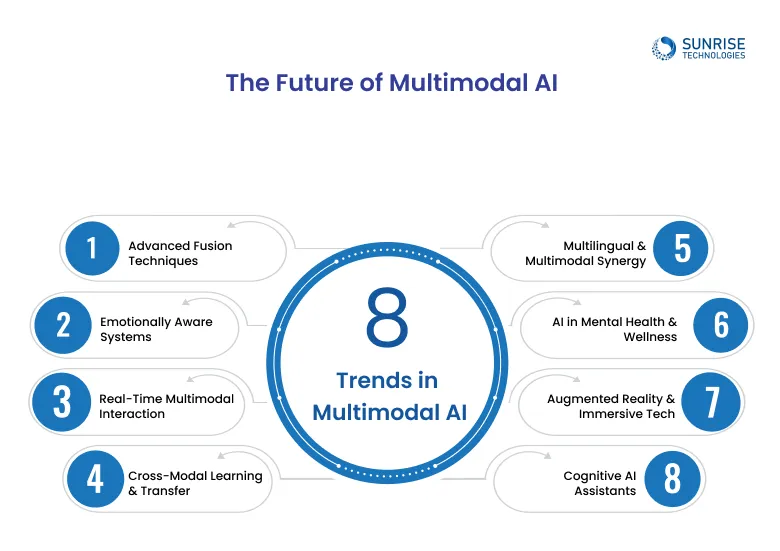

The Future of Multimodal AI

Unlike traditional unimodal systems that rely on a single type of data, multimodal AI is evolving to fuse diverse inputs using sophisticated alignment and attention mechanisms, enabling richer, context-aware, and emotionally responsive experiences.

Thanks to breakthroughs in cross-modal transformers, zero-shot learning, and real-time inference at the edge, we’re entering an era where AI will no longer just process information—it will interpret emotion, intent, and context across formats.

Future models will use dynamic fusion algorithms to better align data from multiple modalities in real time. These adaptive techniques will drastically improve accuracy in complex environments.

AI will evolve to detect emotions from tone, facial cues, text patterns, and physiological signals. This opens up emotionally intelligent assistants and more empathetic digital experiences.

Expect lower latency and seamless interaction across text, speech, and video using edge-based multimodal AI. This will empower live, context-aware responses in everything from customer service to healthcare.

Cross-modal models will be able to learn from one data type and perform tasks in another, such as using vision data to generate text or vice versa, enabling more generalizable AI.

AI will soon blend multilingual understanding with multimodal inputs, helping it perform translations, summarizations, and conversations across both languages and media types.

Multimodal AI will be used to assess mental health through voice tone, facial expression, text input, and even posture or biometric data, enabling non-invasive early detection and support.

Multimodal AI will power AR/VR by combining spatial data, gestures, voice, and visuals for hyper-personalized, responsive, and intuitive immersive experiences.

Next-gen digital assistants will use multimodal inputs to understand not just commands, but context, supporting proactive help, task anticipation, and personalized interactions.

Why Sunrise Technologies is Your Multimodal AI Partner

Businesses are flooded with diverse forms of data like text, images, audio, video, and sensor signals. Sunrise Technologies stands at the forefront of building intelligent systems that don’t just process data, but understand it holistically.

We specialize in designing and deploying multimodal AI solutions that combine the power of machine learning, computer vision, natural language processing (NLP), and even sensor analytics, bringing together disparate data streams into a unified intelligence pipeline.

- Proven Expertise in AI Integration: With a strong track record of AI development across verticals, we excel at fusing AI into your existing digital ecosystem, whether that is a CRM, an ERP, cloud infrastructure, or edge devices.

- Domain-Specific Multimodal Deployments: We tailor multimodal AI models that are finely tuned to your industry, ensuring higher accuracy, deeper insights, and real-time decision-making aligned with domain workflows.

- Scalable, Customizable, and Enterprise-Ready: We deliver modular architectures that scale with your business. Whether you're looking for cloud-based AI, on-premise intelligence, or edge computing models, we build solutions that evolve with your needs.

Relying on just one type of data is no longer enough, especially when your business decisions demand nuance, context, and real-time responsiveness. Multimodal AI breaks these limitations by combining visual perception, natural language understanding, and signal interpretation into one cohesive, intelligent system.

The result? Higher prediction accuracy, more natural human-AI interactions, and smarter automation that adapts to complex environments.

Partnering with a leading AI development company like Sunrise Technologies empowers your business to go beyond traditional AI. With our expertise in building scalable, cross-modal architectures and industry-specific AI models, your multimodal solution becomes a true competitive differentiator.

Let Sunrise Technologies be your trusted partner in custom AI application development services for navigating this exciting frontier, helping you build intelligent solutions that see, hear, read, and ultimately understand the world and your business in a whole new light.

Revolutionize how your enterprise operates with AI that understands context, reacts in real time, and adapts to every input like a digital genius.

From healthcare diagnostics to retail shopping assistants and smart surveillance, real-world applications of multimodal AI in business are vast and growing daily.

By aligning features across different data types, multimodal AI improves cross-modal learning, enabling the system to draw relationships between speech, image, and other inputs for more accurate predictions.

Multimodal AI enhances context understanding, reduces bias, and ensures robust decision-making through cross-modal learning and diverse data integration.

Natural language processing (NLP) and computer vision are core components in multimodal AI, allowing systems to understand both language and visuals contextually and simultaneously.

From improving diagnostics in healthcare to enhancing security in finance and customer engagement in retail, multimodal AI is transforming industries through widespread adoption and innovation.

Sam is a chartered professional engineer with over 15 years of extensive experience in the software technology space. Over the years, Sam has held the position of Chief Technology Consultant for tech companies both in Australia and abroad before establishing his own software consulting firm in Sydney, Australia. In his current role, he manages a large team of developers and engineers across Australia and internationally, dedicated to delivering the best in software technology.